Your organization has successfully been using RTC for work items and planning, and you’re now ready to move your source control and build as well to take advantage of the full capabilities available to you and reap the benefits of an all-in-one tool. Where do you begin?

Two of the biggest design decisions you will face when migrating your code will be how to logically organize your source into components, and how many streams you will need to properly flow your changes from development to production. You will need to consider things like common code, access control, and the recommended best practice of limiting the number of files in your component to approximately 1000 (500 for earlier releases). You may decide to enlist the help of IBM or a business partner to assist you in devising your strategy.
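As a rough planning aid, the component size guideline above can be checked against a proposed source-to-component mapping before anything is imported. This is only a sketch: the mapping, the file names, and the `oversized_components` helper are hypothetical and not part of RTC. The limit of 1000 is simply the guideline quoted above.

```python
from collections import Counter

# Guideline quoted above; use 500 for earlier releases.
MAX_FILES_PER_COMPONENT = 1000


def oversized_components(file_to_component, limit=MAX_FILES_PER_COMPONENT):
    """Return {component: file_count} for every component above the limit."""
    counts = Counter(file_to_component.values())
    return {name: n for name, n in counts.items() if n > limit}


# Hypothetical plan: source member -> proposed component.
plan = {f"PAY{i:04d}.cbl": "Payroll" for i in range(1200)}
plan.update({f"COMMON{i:03d}.cpy": "CommonCopybooks" for i in range(300)})

print(oversized_components(plan))  # {'Payroll': 1200}
```

A component that shows up here is a candidate for splitting, for example along application or access control boundaries.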

Once you’ve devised your component and stream strategy, you’ll need to organize your source data sets and prepare for zimport. You can read a bit about zimport in my earlier post, “Getting my MVS files into the RTC repository (and getting them back out again)”. At this point, best practice dictates that you do the following:

1. Import your source to your highest level stream (i.e., production). You may choose to do this as a series of zimports in order to create a history of your major releases to be captured in RTC.

2. Perform a dependency build at the production level. Dependency build creates artifacts known as build maps, one for each program, to capture all of the inputs and outputs involved in building a program. These maps are used in subsequent builds to figure out what programs need to be re-built based on what has changed. This initial build at the production level will build all of your programs, serving two purposes: (1) to prove you have successfully imported your source and you are properly configured to build everything and avoid surprises down the road and (2) to create build maps for all of your programs so that going forward during actual development you will only build based on what has changed.

3. Component promote your source and outputs down through your hierarchy (e.g., production -> QA -> test -> development). This will populate your streams and propagate your build maps down through each level to seed your development-level dependency build. Note that regardless of whether you are going to build at a given level (e.g., test), you still need the build maps in place at that level for use in the promotion process.
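To make the role of build maps in step 2 more concrete, here is a toy model of the idea: one record per program listing its inputs and outputs, consulted later to decide what needs rebuilding. The `BuildMap` class, the integer version markers, and the file names are all assumptions made for illustration. Real RTC build maps are richer and are not accessed this way.

```python
from dataclasses import dataclass, field


@dataclass
class BuildMap:
    """Toy stand-in for a build map: one per program."""
    program: str
    inputs: dict = field(default_factory=dict)   # input name -> version seen at build time
    outputs: list = field(default_factory=list)  # e.g. load module names


def programs_to_rebuild(build_maps, current_versions):
    """Return programs with at least one input that differs from its build map."""
    stale = []
    for bm in build_maps:
        if any(current_versions.get(name) != seen for name, seen in bm.inputs.items()):
            stale.append(bm.program)
    return stale


maps = [
    BuildMap("PAYCALC", {"PAYCALC.cbl": 1, "RATES.cpy": 1}, ["PAYCALC"]),
    BuildMap("PAYRPT", {"PAYRPT.cbl": 1}, ["PAYRPT"]),
]

# Only the shared copybook has changed since the initial build.
current = {"PAYCALC.cbl": 1, "RATES.cpy": 2, "PAYRPT.cbl": 1}
print(programs_to_rebuild(maps, current))  # ['PAYCALC']
```

Without a build map for a program, there is nothing to compare against, which is why the initial full build (or a substitute for it) is needed to seed every level.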

Once these steps are complete, actual development can begin. Your developers can start delivering changes at your lowest (development) level, build only what’s changed, and use work item promotion to propagate your changes up through your hierarchy to the production level. Each time you begin work on a new release, you will again use component promotion to seed that new release (source code and build artifacts) from the production level.

Great! Except, if you’re like most users, one sentence above has left you reeling: This initial build at the production level will build all of your programs. You want me to do WHAT?! Re-build EVERYTHING?? Yep. For the reasons stated above. But the reality is that this may not be practical or even feasible for a number of reasons. So let’s talk about your options.

Hopefully your biggest objection here is that you don’t want a whole new set of production-level modules, when your current production modules are already tested and proven. No problem! Simply perform the production dependency build to prove out your build setup and generate your build maps, and then throw away all of the build outputs and replace them with your current production modules. This is actually the recommended migration path. You will simply need to use the “Skip timestamp check when build outputs are promoted” option when you are component promoting down (but don’t skip it when you work item promote back up). Also ensure that your dependency builds are configured to trust build outputs. This is the default behavior, and allows the dependency build to assume that the outputs on the build machine are the same outputs that were generated by a previous build. When this option is turned off, the dependency build checks for the presence of the build outputs and confirms that the timestamp on each output matches the timestamp in the build map. A non-existent build output or a mismatched timestamp will cause the program to be rebuilt.
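The effect of the trust build outputs setting can be sketched as a small decision function. This models only the output check described above, not the detection of changed inputs. The function name, the dictionaries, and the integer timestamps are hypothetical stand-ins rather than anything from the RTC API.

```python
def outputs_force_rebuild(recorded_outputs, actual_outputs, trust_build_outputs=True):
    """Decide whether the state of the outputs alone forces a rebuild.

    recorded_outputs: output name -> timestamp stored in the build map
    actual_outputs:   output name -> timestamp found on the build machine
                      (a missing key means the output does not exist)
    """
    if trust_build_outputs:
        # Outputs are assumed to be the ones a previous build produced.
        return False
    for name, recorded_ts in recorded_outputs.items():
        if actual_outputs.get(name) != recorded_ts:
            return True  # missing output or mismatched timestamp
    return False


recorded = {"PAYCALC": 100}
swapped_in = {"PAYCALC": 42}  # existing production module copied over the built one

print(outputs_force_rebuild(recorded, swapped_in))                             # False
print(outputs_force_rebuild(recorded, swapped_in, trust_build_outputs=False))  # True
print(outputs_force_rebuild(recorded, {}, trust_build_outputs=False))          # True
```

This is why replacing the freshly built modules with your existing production modules only works cleanly when outputs are trusted: the replaced modules carry timestamps that no longer match the build maps.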

Ideally you are satisfied and can follow the recommended path of building everything and replacing the outputs with your production modules. However, this may not be the case, so let’s explore a few other possible scenarios and workarounds:

1. Issue: Some of my programs need changes before they can be built, and it’s not feasible to do all of that work up-front before the migration.

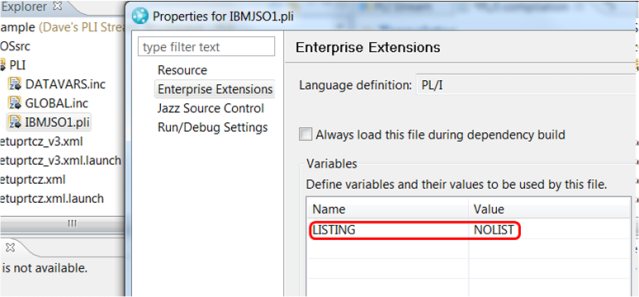

Workaround: Assign your unbuildable programs a language definition with no translator. We will not consider these programs buildable and they will be ignored during dependency build. When you are ready to update the programs, assign them a proper language definition at that time. You can also use NO language definition on your unbuildable programs if you’re not using default language definitions (i.e., language definitions assigned based on file extension). In this case, the files will also not be scanned. Note: The approach of adding a language definition after the initial build is broken from V4 onward, and a fix is currently targeted for 4.0.2. See the defect “new file is not built if Lang def is assigned after 1st build” (243516) for details.

2. Issue: All those copies of outputs at each level in my hierarchy are just taking up space. I don’t want them there.

Workaround: You can modify the promotion script to promote the build maps but not copy the outputs themselves. Again, ensure that trust build outputs is true (default) in your dependency build definitions. If you are building at a level where you don’t have outputs, ensure that your production libraries are included in the SYSLIB in your translators.

Follow these steps to utilize this workaround:

1. Copy generatedBuild.xml from a promotion result to the host.

2. In the Promotion definition, on the z/OS Promotion tab, choose “Use an existing build file”.

3. Specify the build file you created in step 1.

4. For build targets, specify “init, startFinalizeBuildMaps, runFinalizeBuildMaps” without the quotes.

3. Issue: I refuse to build all of my programs. That’s ridiculous and way too expensive.

Workaround: Seed the dependency build by creating IEFBR14 translators. This will give you the build maps you need without actually building anything. Then switch to real translators. There is a major caveat here: Indirect dependencies are not handled automatically until the depending program is actually built. For example, if you have a BMS map that generates a copybook that is included by a COBOL program, the dependency of the COBOL program on the BMS map is not discovered until the COBOL program actually goes through a real build. If you can accept this limitation, one approach to this workaround is as follows:

1. Create two sets of translators: your real translators, and one IEFBR14 translator per original translator. Pairing them one-to-one makes sure there are no issues with SYSLIBs changing when you switch from the IEFBR14 translators to the real ones.

2. Use build properties in your language definition to specify translators, and set those properties in the build definition.

3. Request the build with the properties pointing to the IEFBR14 translators. Everything “builds” but no outputs are generated.

4. Change all of the translator properties in the build definition to point at the real translators.

5. Request another build and see that nothing is built.

This approach again requires that we trust build outputs. Otherwise, everything would be rebuilt, because none of the load modules listed in the build maps actually exist.
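The indirect dependency caveat can be illustrated with a toy model. How RTC records these relationships internally is not described here, so the sets below are invented purely to show the effect: after IEFBR14 seeding, the COBOL program’s map does not yet know about the BMS map, and after a real build it does. All program and file names are hypothetical.

```python
def affected_programs(changed_file, build_maps):
    """Return programs whose (toy) build map lists changed_file as an input."""
    return sorted(p for p, inputs in build_maps.items() if changed_file in inputs)


# Assumed state after IEFBR14 seeding: no copybook was generated, so the
# COBOL program's dependency on the BMS map has not been discovered.
seeded = {
    "CUSTMAP": {"CUSTMAP.bms"},
    "CUSTINQ": {"CUSTINQ.cbl"},
}

# Assumed state after CUSTINQ has gone through a real build.
after_real_build = {
    "CUSTMAP": {"CUSTMAP.bms"},
    "CUSTINQ": {"CUSTINQ.cbl", "CUSTMAP.bms"},
}

print(affected_programs("CUSTMAP.bms", seeded))            # ['CUSTMAP']
print(affected_programs("CUSTMAP.bms", after_real_build))  # ['CUSTINQ', 'CUSTMAP']
```

In the seeded state, a change to the BMS map would rebuild the map but leave the COBOL program untouched, which is the gap you accept with this workaround.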

With any of these approaches, it’s essential that you test out the full cycle on a small subset of your programs before importing and building your full collection. The cycle is: build at production; component promote down; deliver various changes at development (e.g., a main program, a copybook, a BMS map, an ignored change); build the changes; and work item promote the changes up. This ensures that your solution works for your environment and situation.